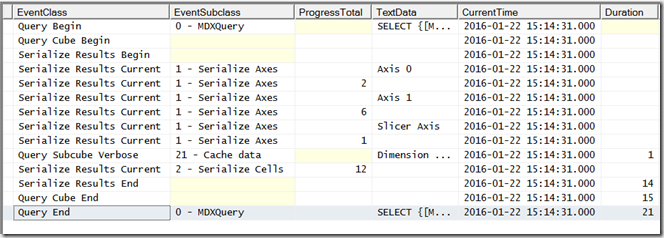

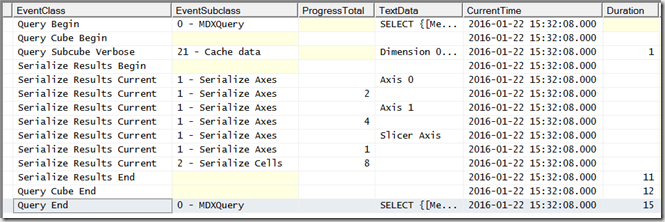

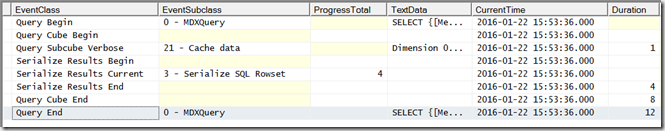

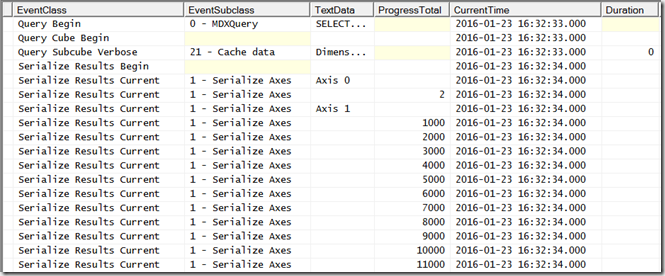

Filtering out empty values is something that SSAS does a lot of during query execution, and it is also a common cause of performance problems. In this series of posts (similar to my series earlier this year on results serialisation) I’ll look at the different types of non empty filtering that can occur in an MDX query, how they can be monitored using Profiler and what you can do to improve their performance.

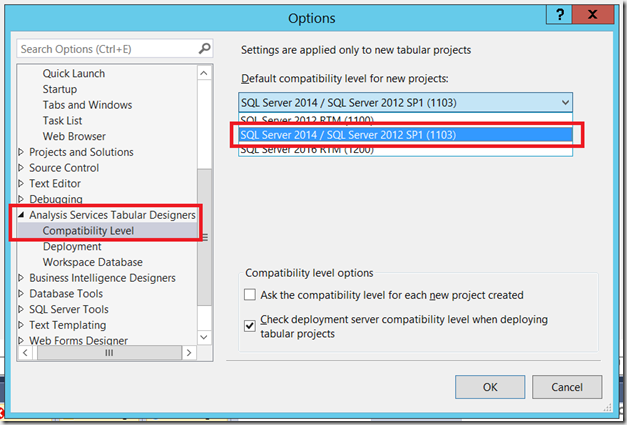

Some of this information has come from an old white paper, but I’ve found that some of what that paper says is now out of date and I’m extremely grateful to Akshai Mirchandani of Microsoft for answering my questions on this subject. Even with the long-suffering Akshai’s help a lot of the information here is based on my own research and therefore potentially incomplete/inaccurate, potentially different for different versions of SSAS (I’m using SSAS 2014 for this series) and could potentially change again in the future, so take due care!

The first question to ask is: what counts as non empty filtering? There are actually several different operations that the engine treats as a non empty filter, a few of which I was surprised by; here are the ones I know about.

1) The NON EMPTY statement

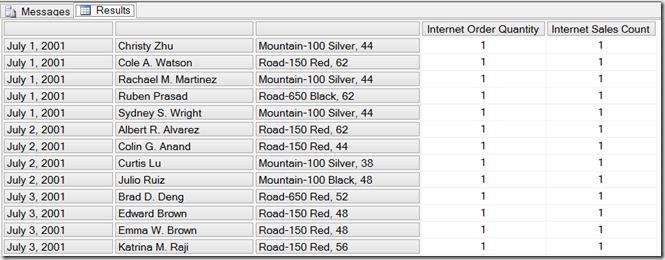

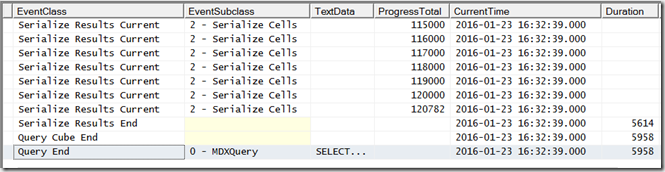

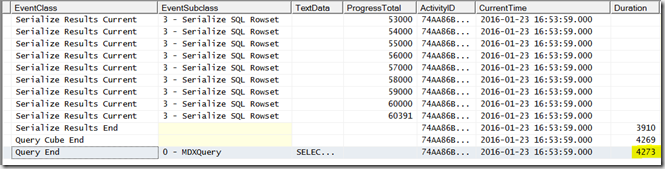

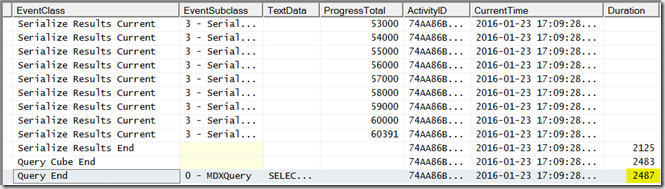

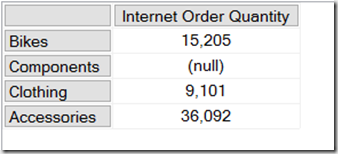

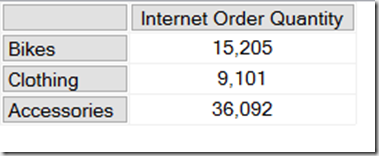

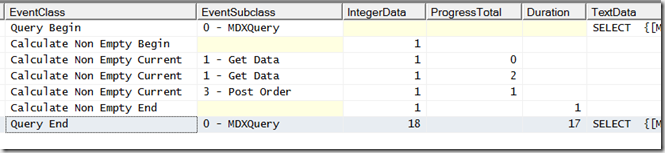

Most MDX queries generated by client tools include a NON EMPTY statement on the rows and columns axis. For example, take a look at the results returned by this MDX query on the Adventure Works cube:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

[Product].[Category].[Category].MEMBERS

ON 1

FROM

[Adventure Works]

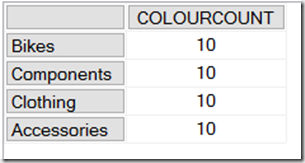

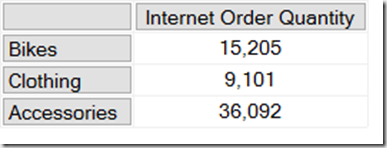

![image image]()

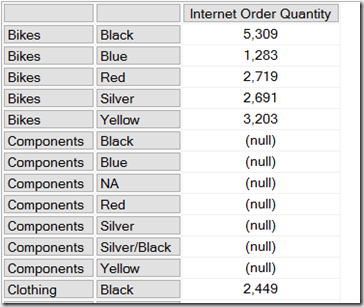

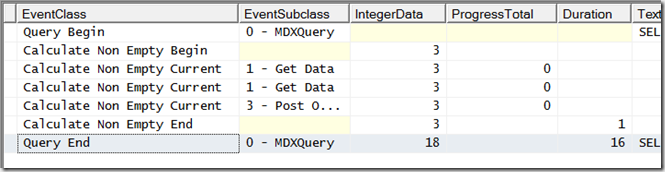

As you can see, the Product Category Components has no data and returns a null. One way to remove the row for Components would be to add a NON EMPTY statement to the rows axis:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

NON EMPTY

[Product].[Category].[Category].MEMBERS

ON 1

FROM

[Adventure Works]

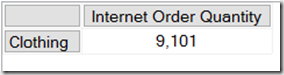

![image image]()

2) The NONEMPTY() function

Often confused with the NON EMPTY statement, but not the same thing: the NON EMPTY statement can only be used on an axis in a SELECT statement, whereas the NONEMPTY() function can be used in any MDX expression. Continuing our example, here’s how to use it to remove the Component category:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

NONEMPTY(

[Product].[Category].[Category].MEMBERS,

{[Measures].[Internet Order Quantity]})

ON 1

FROM

[Adventure Works]

The NONEMPTY() function is much more flexible than the NON EMPTY statement but essentially does the same thing – it isn’t any faster in what it does, but it does allow you to make certain assumptions about your data that can improve query performance (more of that later). One thing to remember is to always set the second parameter, because if you don’t you may get unexpected results.

There is also a NONEMPTYCROSSJOIN() function but it is deprecated and you should not be using it – everything that it does can be done more reliably with other functions.

3) Autoexists

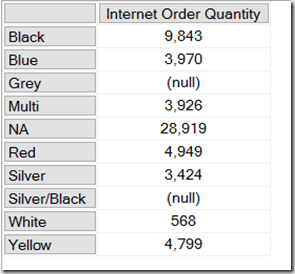

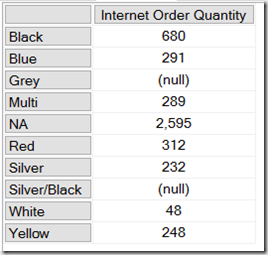

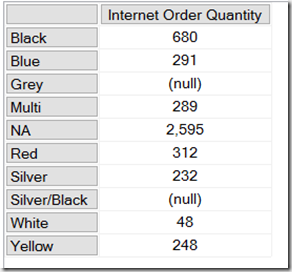

Autoexists is not a feature of the MDX language but rather something that SSAS does automatically to remove tuples from a set that it knows must always be null. It’s described in great detail here, but it’s quite easy to illustrate. We already know from the queries above which categories have data; similarly the following query shows there is data for all colours except Grey and Silver/Black:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

[Product].[Color].[Color].MEMBERS

ON 1

FROM

[Adventure Works]

![image image]()

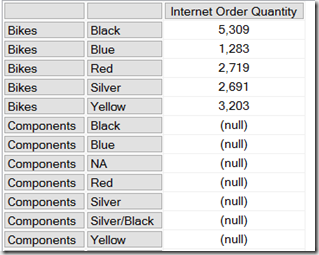

However if you crossjoin every category and every colour on the rows axis, you don’t see every combination of category and colour returned:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

[Product].[Category].[Category].MEMBERS

*

[Product].[Color].[Color].MEMBERS

ON 1

FROM

[Adventure Works]

![image image]()

There is no row for the category Bikes and the colour White, for example. This is because the SSAS engine knows from the data in the dimension that no product exists that is both the colour White and in the category Bikes, so it doesn’t return that combination from the crossjoin – that particular tuple could never contain any data so there’s no point returning it. Notice that there are combinations, such as Components/Black, that exist in the dimension and are present on the rows axis but still return null because there is no value for Internet Order Quantity.

It’s important to remember that autoexists only takes place when you are working with sets of members from different hierarchies on the same dimension, never with sets of members from different dimensions.

4) The EXISTS() function and the EXISTING keyword

The EXISTS() function and the EXISTING keyword allow you to take advantage of autoexists for filtering inside your own expressions without having to actually do a crossjoin (there’s another variant of EXISTS() with a third parameter that behaves more like NONEMPTY() but it’s very rarely used so I’m going to ignore it).

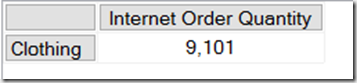

For example, here’s how you can use the EXISTS() function to return all the categories that have a product that is White:

SELECT

{[Measures].[Internet Order Quantity]}

ON 0,

EXISTS(

[Product].[Category].[Category].MEMBERS

, {[Product].[Color].&[White]})

ON 1

FROM

[Adventure Works]

![image image]()

The EXISTING keyword is used within calculations to apply autoexists filtering to a set based on the other hierarchies from the same dimension. The following query contains a calculated measure that counts the number of members on the Color level of the Color hierarchy, and unsurprisingly returns the same value each time it’s called:

WITH

MEMBER MEASURES.COLOURCOUNT AS

COUNT([Product].[Color].[Color].MEMBERS)

SELECT

{MEASURES.COLOURCOUNT}

ON 0,

[Product].[Category].[Category].MEMBERS

ON 1

FROM

[Adventure Works]

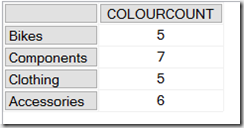

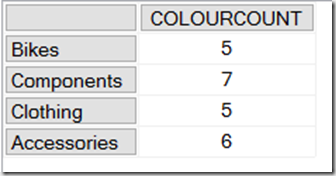

![image image]()

However, if you add the EXISTING keyword just before the set in the calculated measure definition, like so:

WITH

MEMBER MEASURES.COLOURCOUNT AS

COUNT(EXISTING [Product].[Color].[Color].MEMBERS)

SELECT

{MEASURES.COLOURCOUNT}

ON 0,

[Product].[Category].[Category].MEMBERS

ON 1

FROM

[Adventure Works]

Then you’ll see that the calculation now returns the number of members on the Color level of the Color hierarchy after autoexists filtering has been applied; so for example the first line shows there are five distinct colours associated with the Category Bikes:

![image image]()

Summary

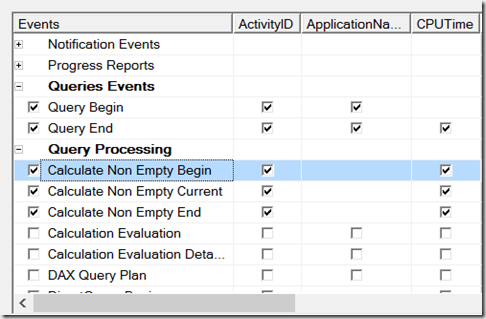

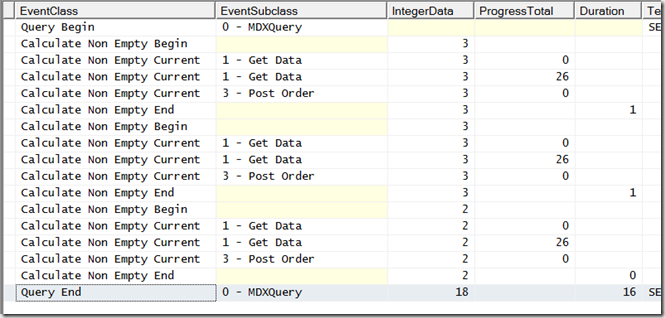

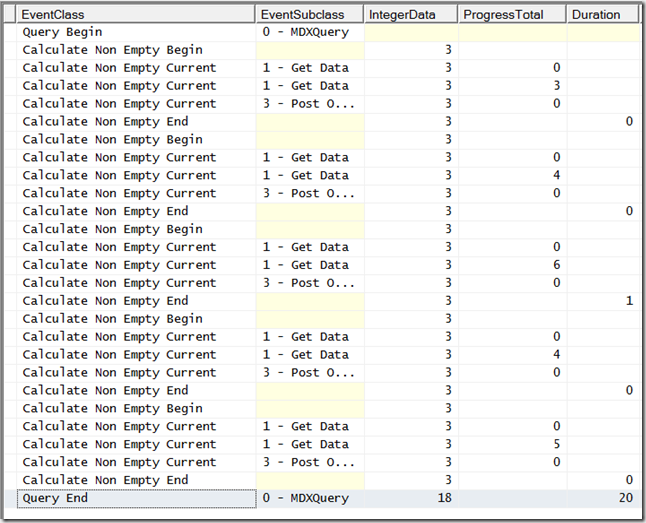

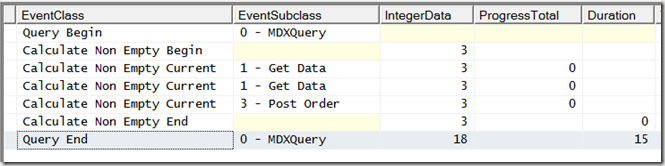

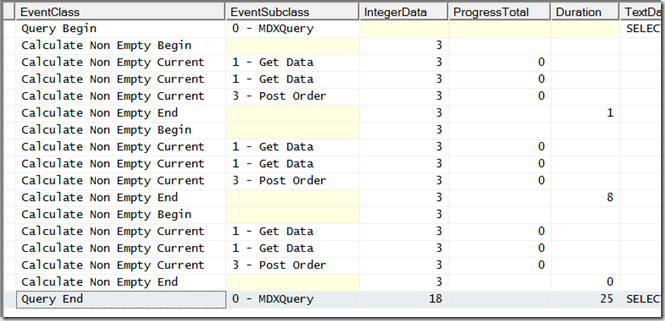

OK, after that somewhat lengthy introduction, in part 2 I’ll show you how to use Profiler to monitor what’s going on inside SSAS when you do all of these different types of non empty filtering.

![]()

![]()